In the rapidly expanding artificial intelligence (AI) and machine learning (ML) landscape, an organization's success depends on deploying models into production reliably and at scale. MLOps, the fusion of ML and DevOps, has emerged as a methodology for streamlining the life cycle of ML models. Combined with generative AI (GenAI) and the LlamaIndex framework, MLOps practices help teams deploy GenAI solutions with better speed, accuracy, and cost-effectiveness. Let’s delve into how it all comes together.

What Is MLOps?

MLOps (machine learning operations) is a set of practices and principles designed to streamline the end-to-end machine learning life cycle. MLOps integrates the development of ML models with their deployment, standardizing both and supporting effective version control, continuous integration and delivery (CI/CD), monitoring, and infrastructure management of these models in production environments.

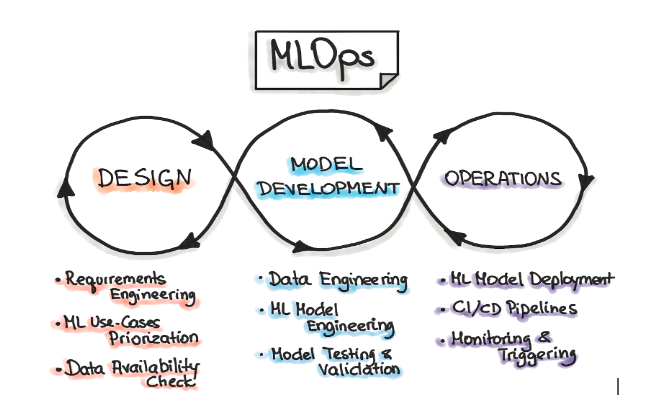

The following image shows the iterative-incremental process in MLOps:

Figure 1. Iterative-Incremental Process in MLOps

Source: MLOps Principles

To establish a complete MLOps life cycle for your ML projects, you should include the following components:

- Feature store: This component stores preprocessed, cleaned, and labeled data — along with significant variables — within the project. It ensures that this data remains traceable and accessible in a central feature store.

- Data versioning: Data versioning is crucial for tracking the data used in predictive models. It allows you to maintain a record of data versions, ensuring repeatability and reproducibility of experiments.

- Model versioning: This aspect involves versioning the trained models used for prediction. It allows you to track hyperparameter modifications and adjustments made to the models during experiments. It works in conjunction with data versioning and provides a comprehensive view of the data and models used, facilitating performance evaluation.

- Model registration: Serving as a repository, the model registration component connects model versions, data, and performance metrics. It provides a structured overview of your models, their evolution, and their associated data.

- Model monitoring: This component charts the performance of models over time. Monitoring model efficiency enables the identification of trends and deviations in performance.

- CI/CD: CI/CD automates the link between model monitoring and model training and deployment. It requires tracking of performance changes in the production model. Additionally, it automates model deployment in conjunction with code versioning, driven by performance indicators.
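As a rough illustration of how data versioning, model versioning, and model registration fit together, here is a minimal sketch using only the Python standard library. The `fingerprint` helper, the `ModelRecord` and `ModelRegistry` classes, and the sample data and metrics are all hypothetical names and values invented for this example; a real project would more likely rely on dedicated tooling.

```python
import hashlib
import json
from dataclasses import dataclass, field


def fingerprint(obj) -> str:
    """Return a short, deterministic content hash for a JSON-serializable object."""
    payload = json.dumps(obj, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()[:12]


@dataclass
class ModelRecord:
    name: str
    data_version: str    # hash of the training data snapshot
    params_version: str  # hash of the hyperparameters
    metrics: dict


@dataclass
class ModelRegistry:
    """Hypothetical in-memory registry linking models, data, and metrics."""
    records: list = field(default_factory=list)

    def register(self, name, data, params, metrics) -> ModelRecord:
        record = ModelRecord(name, fingerprint(data), fingerprint(params), metrics)
        self.records.append(record)
        return record

    def best(self, metric: str) -> ModelRecord:
        """Return the registered record with the highest value for the metric."""
        return max(self.records, key=lambda r: r.metrics[metric])


# Illustrative data and metrics (made up for this sketch).
registry = ModelRegistry()
data_v1 = [{"text": "good", "label": 1}, {"text": "bad", "label": 0}]
data_v2 = data_v1 + [{"text": "great", "label": 1}]

first = registry.register("sentiment", data_v1, {"lr": 0.1}, {"accuracy": 0.81})
second = registry.register("sentiment", data_v2, {"lr": 0.1}, {"accuracy": 0.86})

print(first.data_version != second.data_version)      # the data changed between runs
print(first.params_version == second.params_version)  # the hyperparameters did not
print(registry.best("accuracy") is second)
```

Hashing the content rather than relying on file names means that two experiments can be compared by checking which fingerprints differ, which is the traceability that the versioning and registration components aim for.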

What Is Generative AI?

Generative AI focuses on generating new content — such as music, images, text, and even video — that is similar to what a human would produce. It leverages techniques and models from supervised and unsupervised learning, adding a layer of creativity based on existing data patterns to generate content. Some popular GenAI models include:

- Pix2Pix: Pix2Pix is an image-to-image translation generative adversarial network (GAN) that maps input images to desired outputs. It performs tasks such as turning sketches into realistic images or colorizing grayscale images.

- GPT-3 (Generative Pre-trained Transformer 3): OpenAI’s GPT-3 can generate coherent and contextually relevant text across various tasks, from language translation to text completion.

- Seq2Seq (Sequence-to-Sequence): Seq2Seq models consist of two recurrent neural networks (RNNs) — an encoder and a decoder — which can perform tasks such as machine translation, text summarization, and conversation generation.

What Is LlamaIndex?

LlamaIndex is a data framework that enables you to connect your data sources to large language models (LLMs). It works by breaking down your data into text chunks called “nodes” and indexing them through different types of indexes (managed by the framework). Those indexes are lightweight structures, such as embeddings (vectors), that represent the source data compactly while preserving the key information and relationships within the nodes.

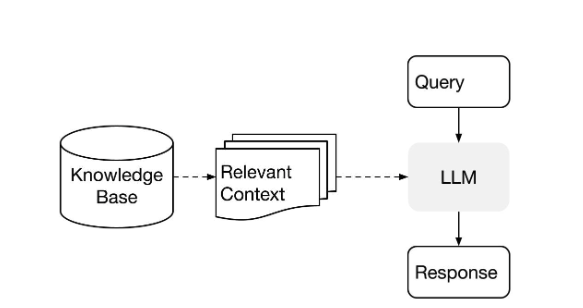

LlamaIndex uses retrieval augmented generation (RAG), a paradigm for augmenting LLMs with custom data. In turn, LlamaIndex allows for the passing of relevant context and a prompt to an LLM to query data, as shown in the following image:

Figure 2. RAG Usage in LlamaIndex

Source: LlamaIndex High-Level Concepts

To implement LlamaIndex, you must consider its two main stages:

- Indexing: Prepares the data context and transforms it. The steps of this stage are:

  - Data is ingested into LlamaIndex.

  - LlamaIndex breaks the ingested data down into text chunks called “nodes.” Each node contains a piece of text and metadata about the text.

  - LlamaIndex indexes the nodes by creating a mapping between each node and a vector representation of its text. The vector is a numerical representation (an embedding) that makes it possible to compare the meaning of a node with the meaning of a question.

- Querying: Retrieves relevant context to assist the LLM in responding to a question. This stage works as follows:

  - After nodes are indexed, end users can start asking questions. For each question, LlamaIndex uses the index to find the most relevant nodes, and the LLM answers by combining the information from those nodes.
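The two stages can be sketched in plain Python to show the flow of data. This is a toy illustration, not LlamaIndex's API: `Node`, `embed`, `build_index`, `retrieve`, and `build_prompt` are hypothetical names, the sample documents are invented, and a word-count vector stands in for a real embedding model. The final call to an LLM is left out; the sketch stops at the prompt that would be sent.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Node:
    text: str
    metadata: dict
    vector: Counter  # toy stand-in for an embedding


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a real system would use an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_index(documents: dict) -> list:
    """Indexing stage: split each document into sentence-sized nodes and embed them."""
    nodes = []
    for source, text in documents.items():
        chunks = [s.strip() for s in text.split(".") if s.strip()]
        for position, chunk in enumerate(chunks):
            nodes.append(Node(chunk, {"source": source, "position": position}, embed(chunk)))
    return nodes


def retrieve(index: list, question: str, top_k: int = 2) -> list:
    """Querying stage: rank nodes by similarity to the question and keep the best ones."""
    q = embed(question)
    return sorted(index, key=lambda n: cosine(q, n.vector), reverse=True)[:top_k]


def build_prompt(question: str, nodes: list) -> str:
    """Combine the retrieved context and the question into one prompt for an LLM."""
    context = "\n".join(f"- {n.text}" for n in nodes)
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"


docs = {
    "mlops.txt": "MLOps streamlines the machine learning life cycle. CI/CD automates model deployment.",
    "rag.txt": "Retrieval augmented generation passes relevant context to an LLM.",
}
index = build_index(docs)
question = "What automates model deployment?"
top = retrieve(index, question, top_k=1)
print(build_prompt(question, top))
```

In this sketch the retrieved node is the sentence about CI/CD, because it shares the most words with the question. A real embedding model would also match on meaning rather than only on exact word overlap.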

How Can You Implement MLOps in a GenAI Solution Using LlamaIndex?

MLOps covers both code and ML models. In GenAI solutions, the code responsible for invoking the models needs the same careful handling as the models themselves. In software development, frameworks like React and Django are designed to simplify typical tasks. Text-focused GenAI relies on LLMs to predict natural language text, and LlamaIndex was created to streamline the typical tasks involved in working with such models, which makes it a useful building block when implementing MLOps for these solutions.

LlamaIndex itself is not enough to create a full MLOps solution for GenAI, but it can be a central component in projects that aim to develop a reliable tool.

Here’s an example implementation of MLOps components using LlamaIndex:

- Feature store: LlamaIndex standardizes how indexes are built from your data for use with LLMs, turning those indexes into another data source that can be stored and reused.

- Data versioning: Index storages can be integrated with versioning tools like DVC and Pachyderm, enhancing data version management.

- Model versioning: LlamaIndex adapts model classes from sources like Hugging Face and LangChain, supporting model versioning via compatible plugins. Hyperparameters and templates, such as prompts and temperature, can be managed using LlamaIndex’s standard classes.

- Model registration: While tools like MLflow, SageMaker, and Vertex AI excel at this, LlamaIndex facilitates connections to feature stores and model versioning.

- Model monitoring: LlamaIndex structures offer storage for metrics and performance evaluations, complementing tools like MLflow.

- CI/CD: Model serving can align with code versioning through tools like GitHub, Docker, and Kubernetes. LlamaIndex simplifies code implementation with prebuilt tasks needed for LLM interaction.
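To make the model versioning and CI/CD items more concrete, the following sketch shows one possible way to version prompt and temperature settings and to gate a deployment on an evaluation score. It deliberately uses only the Python standard library rather than LlamaIndex classes; `GenerationConfig`, `should_promote`, the model name `example-llm`, and all scores are assumptions made up for illustration.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class GenerationConfig:
    """Hypothetical bundle of the hyperparameters and templates used to call an LLM."""
    model_name: str
    temperature: float
    prompt_template: str

    def version(self) -> str:
        """Derive a short version identifier from the config's content."""
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()[:10]


def should_promote(candidate_score: float, production_score: float, min_gain: float = 0.01) -> bool:
    """CI/CD gate: deploy only if the candidate beats production by at least min_gain."""
    return candidate_score - production_score >= min_gain


template = "Answer using the context:\n{context}\n{question}"
production = GenerationConfig("example-llm", 0.7, template)
candidate = GenerationConfig("example-llm", 0.2, template)

# A change in temperature alone produces a new version identifier.
print(production.version() != candidate.version())

# Illustrative evaluation scores: only a clear improvement triggers a deployment.
print(should_promote(candidate_score=0.84, production_score=0.80))
print(should_promote(candidate_score=0.805, production_score=0.80))
```

Storing the version identifier alongside monitoring metrics lets a pipeline trace any production response back to the exact prompt and settings that produced it.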

How Can You Benefit from Using MLOps in a GenAI Solution?

By incorporating MLOps into a GenAI solution using a framework such as LlamaIndex, you can achieve the following:

- Enhanced efficiency: With MLOps, you can streamline the GenAI development process, reducing time-to-market for AI applications and enabling faster innovation.

- Improved scalability: You can leverage MLOps automation for seamless AI model scaling, accommodating increased data and user demands. This aligns well with GenAI’s capacity to generate abundant content for evolving needs.

- Better monitoring of your GenAI solutions: MLOps supports the continuous monitoring of model performance, which helps you to make informed decisions on model updates and enhancements.

In Conclusion

MLOps, GenAI, and LlamaIndex make a powerful trifecta for unlocking the full potential of AI deployment. By embracing and integrating the MLOps methodology with GenAI solutions, organizations can achieve more rapid innovation, enhanced creativity, and improved efficiency in their AI initiatives.

At Wizeline, our expert Data & AI teams excel in implementing GenAI solutions with MLOps and enhancing the deployment process. Contact us today at consulting@wizeline.com to learn more about our expertise and gain access to tips that can help take your business operations to new heights.