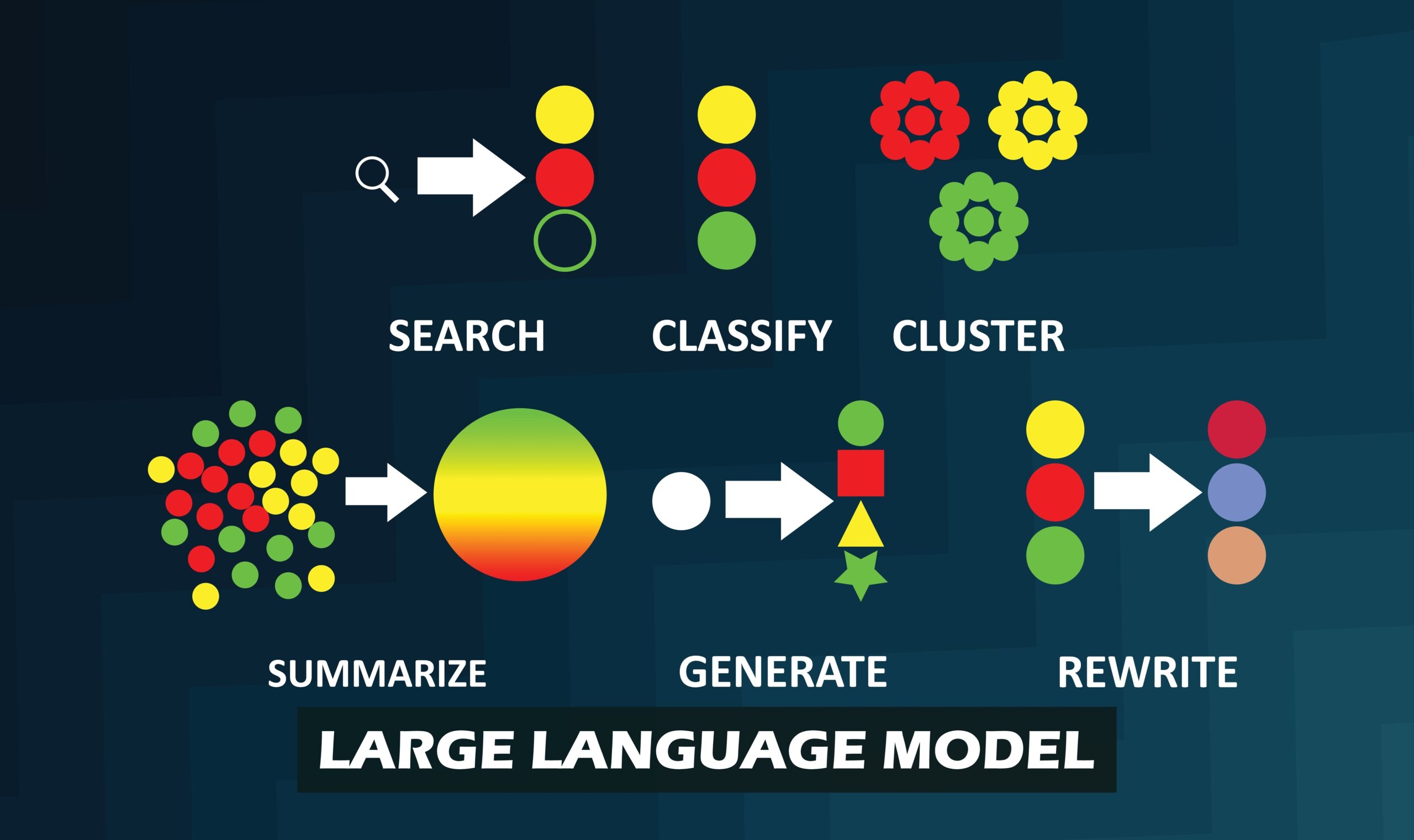

Generative AI (GAI) tools are growing in popularity, with applications that generate text, art, music, and images. Large Language Models (LLMs) are among the most popular GAI tools: given an input, or prompt, they generate the most likely next word and, by repeating that step, whole sentences.

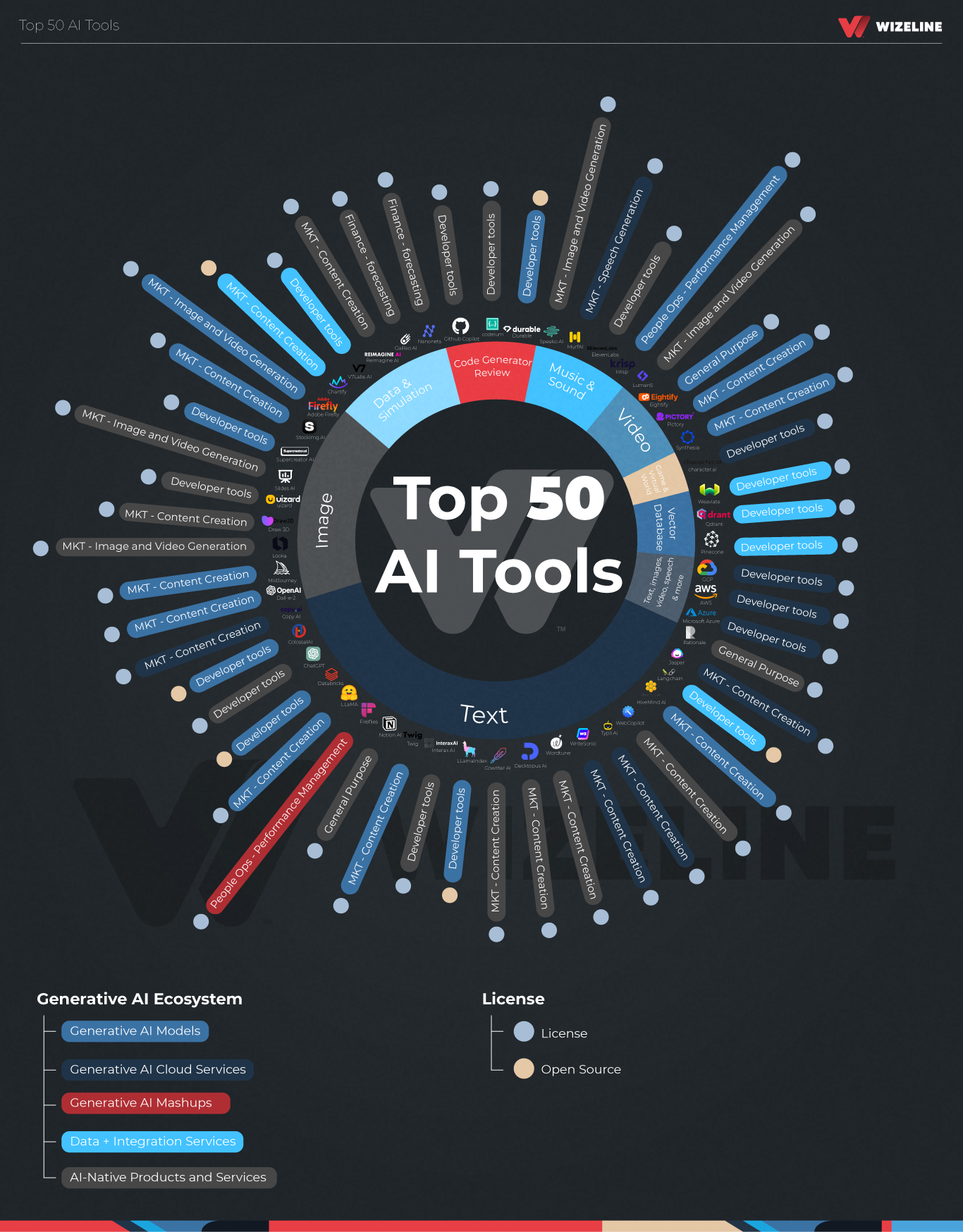

The chart shows some of the most popular LLMs as of 2023, among them the well-known ChatGPT from OpenAI.

However, LLMs also pose significant risks and threats, which can be categorized into four major areas.

LLMs may provide wrong answers

LLMs may provide incorrect answers because they rely solely on their training data, which may contain contradictory or inaccurate information. While LLMs are very clever tools for guessing which word, sentence, and paragraph should follow a certain input, they do not understand what they are discussing. It is therefore essential to review their outputs, as they are not 100% reliable.
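To make this concrete, here is a deliberately tiny sketch in Python. It is not how production LLMs work (they use large neural networks, not word counts); it is only a word-pair counter that picks the most frequent follower of a word. The function names `train_bigram_model` and `predict_next`, as well as the training sentences, are made up for this illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(corpus):
    """Count, for each word, which words follow it in the training sentences."""
    followers = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current_word, next_word in zip(words, words[1:]):
            followers[current_word][next_word] += 1
    return followers


def predict_next(followers, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical training data: the incorrect statement outnumbers the correct one.
corpus = [
    "the capital of australia is sydney",
    "the capital of australia is sydney",
    "the capital of australia is canberra",
]

model = train_bigram_model(corpus)
answer = predict_next(model, "is")
print(answer)
```

Because the wrong sentence appears more often in this toy training set, the model confidently continues "is" with the wrong city. It has no notion of what is true, only of what it has seen most often, which is why reviewing the output matters.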

LLMs may be biased

As noted above, the answers these models produce are only as good as their training data, which, aside from its quality, can also be biased, particularly on subjective topics such as religion, politics, and ethics. As a result, a model's answers may not be inclusive or may fail to consider different points of view, whether through the training team's oversight or by deliberate choice.

LLMs may infringe copyrights

One of the main concerns is the ethics of using these tools, particularly when it comes to the authenticity of generated content. LLMs draw on a wide variety of sources that are not always disclosed, and they may pay little or no attention to the nature of the information they use. Furthermore, a source may be authorized to publish certain materials, yet the use of those materials for training AI models may fall into a gray area, since this use case may not have been considered when they were produced or published.

LLMs may be used for malicious purposes

Last but not least, LLMs may be used maliciously to generate appealing, targeted messages for phishing and scams, and they could also complicate spam detection. While some of these tools have self-censorship mechanisms, these may be circumvented with prompt-engineering techniques.

Looking Ahead

Aside from these risks, there are other areas for improvement to consider, such as the enormous carbon footprint of these tools, given the large amounts of storage capacity and computing power they require.

Several voices are calling for a “pause” in the development of these Generative AI tools, so that appropriate regulations, safeguards, and best practices can be designed and enforced to mitigate these risks. However, these measures will likely lag behind, as has happened with other technologies.

In the end, I believe that the evolution of these tools will likely be more market-driven, as has been the case with other technologies. To deliver value more efficiently and with less risk, LLMs will tend to specialize in domain-specific niches.

This specialization will allow more effective control over the origin and quality of the training data and will provide more tangible, focused value for owners and users. The provision of domain-specific data for AI training is becoming a new market in its own right, but that is a topic for another post.

At Wizeline, our expert Artificial Intelligence and Data teams can help you make the most out of cutting-edge advancements in Generative AI. Contact us today at consulting@wizeline.com to learn more and get access to prompt tips that can help boost your business operations.