Spatial AI is a rapidly growing field that focuses on integrating artificial intelligence and spatial data. Spatial AI extracts insights from spatial data, understands patterns and relationships, and makes predictions and decisions based on that information. It has various applications, from autonomous vehicles to city planning and environmental monitoring. Spatial AI has the potential to revolutionize many industries by enabling more efficient and effective decision-making based on spatial data.

Extended reality (XR), meanwhile, is an umbrella term for a range of technologies that blend physical and virtual environments, including virtual reality (VR), augmented reality (AR), and mixed reality (MR). Spatial AI can enhance XR technologies by giving them context awareness and spatial intelligence: for example, identifying objects in the user’s environment and integrating them into the XR experience, or adapting the XR experience to the user’s location.

Additionally, spatial AI algorithms can improve XR applications’ accuracy and stability by precisely tracking the user’s movements in the physical environment. This can be particularly helpful in applications like remote assistance, where users may need to share their physical environment with a remote expert.
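To make the tracking idea concrete: once the headset reports its pose (a position plus an orientation), virtual content anchored in the room can be re-expressed in the viewer’s frame on every frame, so it appears to stay put while the user moves. The sketch below assumes a y-up, right-handed coordinate system and handles only rotation about the vertical axis (yaw). The function names are hypothetical; real XR runtimes expose full 6-DoF poses through their own APIs.

```python
import math


def yaw_rotation(yaw_rad):
    """3x3 rotation matrix about the vertical (y) axis, y-up right-handed frame."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    return [[c, 0.0, s],
            [0.0, 1.0, 0.0],
            [-s, 0.0, c]]


def world_to_head(point, head_pos, head_rot):
    """Express a world-space point in the head (viewer) frame.

    head_rot maps head-frame directions to world-frame directions, so the
    inverse transform is R^T * (p - t).
    """
    d = [point[i] - head_pos[i] for i in range(3)]
    # Multiply by the transpose of head_rot (column c of R dotted with d).
    return [sum(head_rot[r][c] * d[r] for r in range(3)) for c in range(3)]


# A virtual label anchored 2 m in front of the starting position (-z is forward).
anchor = [0.0, 0.0, -2.0]

# The user steps 1 m to the right: the label now sits 1 m to their left.
print(world_to_head(anchor, [1.0, 0.0, 0.0], yaw_rotation(0.0)))

# The user turns 90 degrees to the left: the label is now on their right.
print(world_to_head(anchor, [0.0, 0.0, 0.0], yaw_rotation(math.pi / 2)))
```

The more precise the tracked pose, the less the anchored content drifts, which is where the accuracy and stability gains mentioned above come from.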

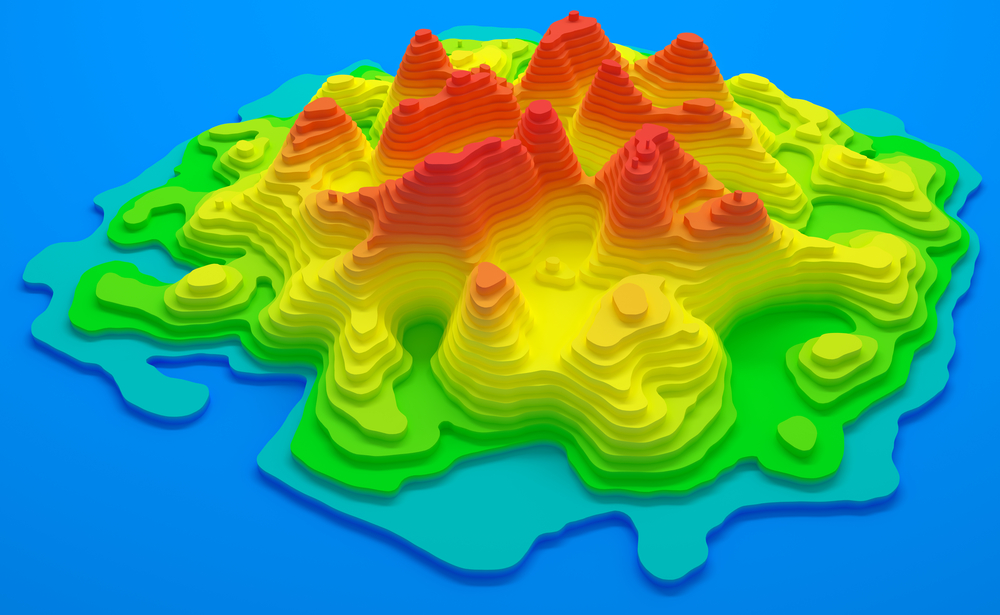

Another area where spatial AI and XR intersect is in creating 3D models of physical environments. Spatial AI algorithms can extract spatial data from images or videos of a physical environment, creating accurate 3D models. These 3D models can be used in XR applications to provide a more immersive experience or to simulate different scenarios.
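A common first step in turning images into 3D data is to obtain a per-pixel depth map (from a depth sensor, multi-view stereo, or a monocular depth network) and back-project each pixel through a pinhole camera model. Here is a minimal sketch, assuming known intrinsics (`fx`, `fy`, `cx`, `cy` are hypothetical calibration values) and depth measured along the camera’s viewing axis:

```python
def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth map into camera-space 3D points (pinhole model).

    depth: 2D list indexed [v][u] (row, column); values <= 0 mean "no data".
    fx, fy: focal lengths in pixels; cx, cy: principal point in pixels.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # skip pixels without a valid depth reading
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Hypothetical 2x2 depth map in metres with one missing reading.
depth = [[2.0, 0.0],
         [1.0, 1.0]]
print(depth_to_points(depth, fx=1.0, fy=1.0, cx=0.0, cy=0.0))
```

Merging point clouds like this from many frames, using the camera poses estimated by tracking, is one way to build a full 3D model of a space.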

AI is the next big integration for XR. As the Into the Metaverse article discusses, AI will advance XR technologies by leaps and bounds. Simply put, AI turns XR devices from spatial displays into context-aware devices. Ordinary mobile devices only have their cameras active at certain times, but XR devices must keep their cameras on at all times for their tracking and reconstruction algorithms to work. That gives AI a continuous view of the user’s surroundings to reason about.

AI can give these XR devices an edge. In this GPT-4 implementation, for example, the author connected his iPhone camera to ChatGPT: the AI learned what a keto diet is, identified the food in the fridge, and searched for a recipe using the ingredients it found. Imagine this on an AR headset like Snap Inc.’s, or whatever Apple brings to the table later this year. Thanks to AI, context-aware systems are getting closer every day!

You can also find cases where XR helps AI. For example, this body measurements estimation algorithm uses a couple of pictures of the user, plus their height and weight, to estimate the user’s body measurements. With this implementation, we can recommend the clothing items that fit best when buying online. This approach can reduce returns and their environmental impact while improving the UX (user experience). In this scenario, XR can improve both the UX and the results by guiding the user into the correct pose for the pictures. While AI is the main actor in this use case, XR can help increase the adoption of these technologies.
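The cited algorithm’s internals are not described here, but one reason a known height helps is that it fixes the scale of the photo. Once you know how many pixels correspond to a centimetre, distances between detected body keypoints can be converted into real measurements. The following is a deliberately simplified sketch, assuming a front-facing photo, keypoints from some pose estimator, and a hypothetical size chart. It is not the cited method, and it ignores weight and body depth entirely.

```python
import math


def pixels_per_cm(head_top_y, heel_y, height_cm):
    """Image scale derived from the user's known height and pixel extent."""
    return abs(heel_y - head_top_y) / height_cm


def distance_cm(p1, p2, scale):
    """Real-world distance between two keypoints given pixels-per-cm scale."""
    return math.dist(p1, p2) / scale


def pick_size(measurement_cm, size_chart):
    """Smallest size whose upper limit fits the measurement (chart sorted ascending)."""
    for size, upper_limit in size_chart:
        if measurement_cm <= upper_limit:
            return size
    return size_chart[-1][0]  # fall back to the largest size


# Hypothetical inputs: a 175 cm user spanning 700 px in the photo.
scale = pixels_per_cm(head_top_y=50, heel_y=750, height_cm=175)
shoulders = distance_cm((300, 200), (480, 200), scale)  # 180 px apart
chart = [("S", 42), ("M", 46), ("L", 50)]  # hypothetical shoulder widths in cm
print(shoulders, pick_size(shoulders, chart))
```

This also shows where XR guidance matters: the scale estimate is only valid if the user stands upright and fully in frame, which is exactly the pose an XR overlay can coach.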

At Wizeline, we are researching AI approaches that can integrate with XR, and we have some awesome findings.

Neural Radiance Fields (NeRFs)

Let’s begin with Neural Radiance Fields (NeRFs). NeRF is a method that synthesizes novel views of complex scenes by optimizing an underlying continuous volumetric scene function from a sparse set of input views. We used this technology to reconstruct Wizeline’s Guadalajara offices from a short drone video that was originally shot for another purpose.
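In the NeRF formulation, a neural network maps a 3D position and viewing direction to a volume density and an emitted color, and each pixel is rendered by compositing samples along its camera ray. The sketch below shows only that compositing (quadrature) step. The densities and colors are hand-picked stand-ins for what a trained network would output, and the network, ray sampling, and training are omitted.

```python
import math


def render_ray(sigmas, colors, deltas):
    """Composite samples along one camera ray, NeRF-style.

    C = sum_i T_i * (1 - exp(-sigma_i * delta_i)) * c_i,
    T_i = exp(-sum_{j<i} sigma_j * delta_j).

    sigmas: volume density at each sample (near to far)
    colors: (r, g, b) color at each sample, values in [0, 1]
    deltas: distance from each sample to the next one
    """
    rgb = [0.0, 0.0, 0.0]
    transmittance = 1.0  # T_i: how much light survives to reach sample i
    for sigma, color, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha
        for k in range(3):
            rgb[k] += weight * color[k]
        transmittance *= 1.0 - alpha  # same as multiplying by exp(-sigma * delta)
    return rgb


# Empty space, then a semi-transparent red sample, then an opaque blue surface.
print(render_ray(sigmas=[0.0, 0.7, 50.0],
                 colors=[(1, 1, 1), (1, 0, 0), (0, 0, 1)],
                 deltas=[0.5, 1.0, 1.0]))
```

Because the color depends on the viewing direction, the same point can look different from different angles. That is one reason NeRFs cope better with specular effects such as reflections.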

One of the exciting things about NeRFs is that their volumetric reconstruction, which can be exported as a point cloud, is robust to reflections. With photogrammetry, reflections or dark surfaces are often interpreted as hollow spaces, whereas NeRFs can reconstruct such features as solid geometry. Another useful feature of NeRFs is that you can optimize the point cloud and export it as a 3D mesh. In doing so, you lose the reflections, because the mesh keeps the geometry but not the view-dependent appearance that produces them. However, you can then use the 3D data for other purposes.
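Exporting geometry from a trained NeRF usually means sampling its density on a regular 3D grid. A mesh can then be extracted with an algorithm such as marching cubes, while the simplest point cloud just keeps the voxels whose density exceeds a threshold. Only density survives this step, so the view-dependent color, and with it the reflections, is dropped. Here is a minimal sketch of the thresholding step, with a hypothetical grid, origin, voxel size, and threshold:

```python
def density_to_points(density, origin, voxel_size, threshold):
    """Convert a sampled density grid into a point cloud of occupied voxel centers.

    density: nested lists indexed [i][j][k] along x, y, z
    origin: (x, y, z) of the grid's minimum corner
    voxel_size: edge length of one voxel
    threshold: densities above this value count as solid
    """
    points = []
    for i, plane in enumerate(density):
        for j, row in enumerate(plane):
            for k, sigma in enumerate(row):
                if sigma > threshold:
                    points.append((origin[0] + (i + 0.5) * voxel_size,
                                   origin[1] + (j + 0.5) * voxel_size,
                                   origin[2] + (k + 0.5) * voxel_size))
    return points


# A 2x2x1 grid where only two voxels are dense enough to count as surface.
grid = [[[0.1], [8.0]],
        [[12.0], [0.3]]]
print(density_to_points(grid, origin=(0.0, 0.0, 0.0), voxel_size=0.5, threshold=5.0))
```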

Use cases include progress materials that an architecture studio can show its customers during a building project, as well as digital twins, where NeRFs make it possible to generate content for the scenario seamlessly.

For more details about this method, consult the paper “NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis” (Mildenhall et al., 2020).

Stable Diffusion

Stable Diffusion is a latent text-to-image diffusion model released in 2022. Its primary use is generating detailed images conditioned on text descriptions. It also supports inpainting (regenerating a masked region of an existing image, for example to add or remove features), outpainting (extending an image beyond its original borders), and image-to-image translation guided by a text prompt. Tools built around it can also generate depth maps.

For example, a depth-map script for the Stable Diffusion WebUI creates depth maps and, more recently, side-by-side or anaglyph 3D stereo image pairs from a single image. The result can be viewed on 3D or holographic devices like VR headsets or Looking Glass displays, applied to a plane with a displacement modifier in render or game engines, and maybe even 3D printed.

Figure 1. Depth Map of a 2D Object Created with Stable Diffusion
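The depth-map script’s own implementation is not reproduced here. The basic idea behind turning one image plus a depth map into a stereo pair can be sketched as depth-image-based rendering: shift each pixel horizontally by a disparity proportional to its nearness, in opposite directions for the two eyes, then fill the gaps. This simplified version assumes depth normalized to [0, 1] with 1 meaning nearest and uses a naive left-neighbor hole fill. It then builds a red-cyan anaglyph and a side-by-side image from the two views.

```python
def shift_view(image, depth, max_disparity, direction):
    """Synthesize one eye's view by shifting pixels horizontally.

    image: rows of (r, g, b) tuples
    depth: rows of values in [0, 1], where 1 is nearest (assumed convention)
    direction: +1 for one eye, -1 for the other
    """
    height, width = len(image), len(image[0])
    out = [[None] * width for _ in range(height)]
    nearest = [[-1.0] * width for _ in range(height)]  # z-buffer per target pixel
    for y in range(height):
        for x in range(width):
            d = depth[y][x]
            nx = x + direction * round(d * max_disparity)
            # Nearer pixels win when two source pixels land on the same target.
            if 0 <= nx < width and d > nearest[y][nx]:
                out[y][nx] = image[y][x]
                nearest[y][nx] = d
        # Naive hole filling: copy the pixel to the left (or the source pixel at x=0).
        for x in range(width):
            if out[y][x] is None:
                out[y][x] = out[y][x - 1] if x > 0 else image[y][x]
    return out


def anaglyph(left, right):
    """Red-cyan anaglyph: red from the left view, green and blue from the right."""
    return [[(l[0], r[1], r[2]) for l, r in zip(lrow, rrow)]
            for lrow, rrow in zip(left, right)]


def side_by_side(left, right):
    """Place the two views next to each other in one image."""
    return [lrow + rrow for lrow, rrow in zip(left, right)]


# Hypothetical 1x4 image with a single near pixel (depth 1.0) in the middle.
image = [[(10, 10, 10), (20, 20, 20), (200, 0, 0), (30, 30, 30)]]
depth = [[0.0, 0.0, 1.0, 0.0]]
left = shift_view(image, depth, max_disparity=1, direction=+1)
right = shift_view(image, depth, max_disparity=1, direction=-1)
print(side_by_side(left, right))
print(anaglyph(left, right))
```

Production tools use more careful gap filling for the disoccluded regions; the naive fill here produces visible streaks at object edges.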

Object Segmentation

Another use of AI in XR is image segmentation. Meta recently released the Segment Anything Model (SAM), which, in Meta’s words, can “cut out” any object in any image with a single click. They have released a demo for the Meta Quest Pro headset in which every object in the room is segmented and classified. This type of algorithm could turn XR systems into context-aware systems.

Currently, XR devices generate 3D reconstructions of the environment, but these reconstructions lack context: the device knows that a shape or obstacle is in a specific position but does not know what it is. If we apply SAM in this context, we can segment that shape and, together with a classifier (SAM’s masks carry no class labels of their own), identify what it is and what surrounds it to establish a better scene understanding. We can go from knowing that there is a desk in front of us to identifying its purpose: whether it is a drawing desk, a workbench, or a PC desk.
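One way to attach meaning to the device’s reconstruction is to project 2D segmentation masks onto the 3D points the camera sees. The sketch below assumes you already have:

- per-object boolean masks, for example the `segmentation` entries produced by `SamAutomaticMaskGenerator.generate` in Meta’s `segment_anything` package;
- a label for each mask from a separate, hypothetical classifier;
- a per-pixel grid of 3D points, such as a depth back-projection produces.

It returns each labeled object’s 3D centroid, which an XR app could use to place contextual content.

```python
def object_centroids(masks, labels, points_by_pixel):
    """3D centroid of each segmented, labeled object.

    masks: list of 2D boolean grids [v][u], one per object (e.g. from SAM)
    labels: class name for each mask, from a separate classifier
            (SAM's masks are class-agnostic)
    points_by_pixel: 2D grid [v][u] of (x, y, z) tuples, or None where
                     depth is missing
    Returns a list of (label, (x, y, z)) pairs; objects with no valid
    3D points are skipped.
    """
    results = []
    for mask, label in zip(masks, labels):
        pts = [points_by_pixel[v][u]
               for v, row in enumerate(mask)
               for u, inside in enumerate(row)
               if inside and points_by_pixel[v][u] is not None]
        if pts:
            n = len(pts)
            centroid = tuple(sum(p[i] for p in pts) / n for i in range(3))
            results.append((label, centroid))
    return results


# Hypothetical 2x2 frame: the top row is a desk, the bottom row a chair.
points = [[(0.0, 0.0, 1.0), (2.0, 0.0, 1.0)],
          [(0.0, 1.0, 2.0), None]]
masks = [[[True, True], [False, False]],
         [[False, False], [True, True]]]
print(object_centroids(masks, ["desk", "chair"], points))
```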

Connecting these ideas with digital twins and IoT, you could have a device that gives the user live contextual information, for example, depending on which section of a manufacturing plant they are in. The possibilities are endless!
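Here is a minimal sketch of that idea. It assumes the headset’s position has already been registered to the plant’s floor plan (the digital twin) and that IoT readings arrive in a simple dictionary. The zone layout, sensor IDs, and values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Zone:
    """Axis-aligned rectangular area of the plant floor plan, in metres."""
    name: str
    x_min: float
    x_max: float
    y_min: float
    y_max: float
    sensor_ids: tuple = ()


def contextual_info(position, zones, latest_readings):
    """Return the zone containing `position` and the live readings for its sensors."""
    x, y = position
    for zone in zones:
        if zone.x_min <= x <= zone.x_max and zone.y_min <= y <= zone.y_max:
            return zone.name, {sid: latest_readings.get(sid) for sid in zone.sensor_ids}
    return None, {}  # user is outside every mapped zone


zones = [Zone("Assembly", 0, 10, 0, 10, ("press-1-temp",)),
         Zone("Packaging", 10, 20, 0, 10, ("belt-2-speed",))]
readings = {"press-1-temp": 41.5, "belt-2-speed": 1.2}  # hypothetical sensor values
print(contextual_info((12.0, 5.0), zones, readings))
```

A linear scan is fine for a handful of zones; a large plant with many zones would typically use a spatial index instead.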

Human Pose Estimation

Recently, the NBA released a video about the streaming experience of the future, showcasing a feature that scans you and puts you on the court with AI. Some people call these deepfakes, but they belong more in the realm of augmented reality with human pose estimation. In 2019, Facebook Research, in collaboration with Imperial College London, released DensePose. DensePose uses a Fully Convolutional Network (FCN) to track the user’s body and map the tracked points to a 3D mesh, so it can understand the body pose without needing a rig. In other words, it assigns each image pixel on the person a body-part index and UV coordinates on the surface of the 3D mesh.
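DensePose’s per-pixel output is often stored as an “IUV” map: for every pixel on a person, the index of the body part it belongs to plus (U, V) coordinates on that part’s surface, with index 0 for background. The sketch below shows one typical use of such a map, copying image colors into per-part texture maps (texture transfer). The input arrays and texture resolution are hypothetical, and the orientation of the texture axes is an assumption.

```python
def iuv_to_textures(image, iuv, tex_size):
    """Scatter image colors into per-body-part UV textures.

    image: rows of (r, g, b) tuples
    iuv: rows of (part_index, u, v) with u, v in [0, 1]; part_index 0 = background
    tex_size: width and height of each square part texture, in texels
    Returns {part_index: {(tex_x, tex_y): color}}; later pixels overwrite earlier ones.
    """
    textures = {}
    for y, row in enumerate(iuv):
        for x, (part, u, v) in enumerate(row):
            if part == 0:
                continue  # not a person pixel
            tx = min(int(u * tex_size), tex_size - 1)
            ty = min(int(v * tex_size), tex_size - 1)
            textures.setdefault(part, {})[(tx, ty)] = image[y][x]
    return textures


# Hypothetical 1x3 crop: background, then two pixels on the same body part.
image = [[(0, 0, 0), (180, 140, 120), (90, 60, 50)]]
iuv = [[(0, 0.0, 0.0), (2, 0.1, 0.2), (2, 0.9, 1.0)]]
print(iuv_to_textures(image, iuv, tex_size=4))
```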

Additionally, we can use other solutions that estimate skeletal joint positions, like DeepPose or DeepCut, to drive the joint-based rigs used by more common animation systems. These human pose estimation algorithms could be the next generation of motion capture for movie and video game production. They would give customers without the budget for a motion capture (MoCap) set an affordable and viable solution for 3D animation.
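DeepPose and DeepCut output joint locations rather than rotations, so driving a rig requires converting keypoints into joint angles. The following is a minimal 2D sketch, assuming keypoints in image coordinates with hypothetical COCO-style names. Real retargeting works in 3D and has to handle full joint rotations, bone lengths, and noisy detections.

```python
import math


def joint_angle(a, b, c):
    """Angle in degrees at keypoint b between segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    cross = v1[0] * v2[1] - v1[1] * v2[0]
    # atan2 of |cross| and dot avoids the domain issues of acos on a normalized dot.
    return math.degrees(math.atan2(abs(cross), dot))


def elbow_flexion(keypoints, side="right"):
    """Flexion for a rig's elbow bone: 0 = straight arm, 90 = right angle."""
    shoulder = keypoints[f"{side}_shoulder"]
    elbow = keypoints[f"{side}_elbow"]
    wrist = keypoints[f"{side}_wrist"]
    return 180.0 - joint_angle(shoulder, elbow, wrist)


# Hypothetical detections (pixels) from a 2D pose estimator.
frame = {"right_shoulder": (100, 100), "right_elbow": (160, 100), "right_wrist": (160, 40)}
print(elbow_flexion(frame))
```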

On the same topic of body pose and animation, AI can simulate the behavior of muscles. Three years ago, VIPER (Volume Invariant Position-based Elastic Rods) was published. VIPER enabled virtual characters to model the muscle groups required to perform tasks such as running, lifting weights, and jumping. In 2022, Meta announced MyoSuite, which goes a couple of steps further by modeling different muscle groups and their interactions with other systems.
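The “volume invariant” in VIPER’s name refers to rods whose thickness changes as they stretch or compress, so a contracting muscle bulges instead of simply shortening. VIPER enforces this inside a position-based dynamics solver. The sketch below only illustrates the underlying constraint for a single cylindrical segment, with hypothetical dimensions.

```python
import math


def volume_preserving_radius(rest_length, rest_radius, current_length):
    """Radius that keeps a cylindrical segment's volume constant.

    pi * r0**2 * L0 == pi * r**2 * L  =>  r = r0 * sqrt(L0 / L)
    """
    if current_length <= 0:
        raise ValueError("current_length must be positive")
    return rest_radius * math.sqrt(rest_length / current_length)


def cylinder_volume(length, radius):
    return math.pi * radius ** 2 * length


# Hypothetical muscle segment: 10 cm long, 1 cm radius at rest.
for length in (10.0, 12.5, 7.5):
    r = volume_preserving_radius(10.0, 1.0, length)
    print(f"length={length:5.1f} cm  radius={r:.3f} cm  "
          f"volume={cylinder_volume(length, r):.3f} cm^3")
```

Stretching the segment thins it and contracting it thickens it, while the printed volume stays the same.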

These AI algorithms have applications in healthcare, including rehabilitation, surgery, and shared autonomy, and XR is highly compatible with them. Imagine designing a prosthesis with this kind of AI and being able to visualize it on the patient during the design phase. This technology can also enable better preparation during the preoperative stage of surgery by simulating the behavior of the muscle groups involved in the procedure.

Conclusion

AI is not replacing XR but empowering it. These are two highly compatible technologies: AI is what XR needs right now to mature and provide value, and in some cases XR will be what enables a proper UX for AI users. What these two technologies can deliver together is boundless, and we haven’t even discussed digital humans yet. Those require an article of their own.

Overall, spatial AI and XR are complementary technologies that can work together to create more sophisticated and immersive applications. As both technologies evolve, we expect more applications leveraging their combined capabilities to develop new and innovative experiences. Here at Wizeline, we have our very own Spatial AI team, and they are experts in applying AI to your spaces. To learn more about how Wizeline delivers customized, scalable data platforms and AI tools, download our guide to AI technologies or connect with us today at consulting@wizeline.com to start the conversation. Let’s build the future of XR together.