Migrating Apache Beam’s GitHub Actions Workflows from GitHub- to Self-Hosted Runners

Executive Summary

The Wizeline team partnered with Google to design and deploy the infrastructure needed to migrate a set of GitHub Actions workflows from GitHub-hosted to self-hosted runners for Apache Beam, an open-source project used by dozens of companies worldwide.

GitHub Actions is a great way to automate routine tasks such as code testing. By adding your own runners, your business gains more control over the budget and hardware requirements of its workflows.

In this case study, we review how Wizeline helped Apache Beam move the execution of some of its GitHub Actions workflows to Google Cloud Platform (GCP) using Google Compute Engine (GCE) virtual machines (VMs) in autoscaled managed instance groups, Google Kubernetes Engine (GKE), a set of Google Cloud Functions, and a GitHub App.

Glossary

Before jumping in, let’s go over a few key terms:

- GitHub Actions: A continuous integration and continuous delivery (CI/CD) platform that allows you to automate your build, test, and deployment pipeline.

- Runners: Machines that execute jobs in a GitHub Actions workflow.

- GitHub-hosted Runner: A new virtual machine hosted by GitHub with the runner application and other tools preinstalled.

- Self-hosted Runner: A system that you deploy and manage to execute jobs from GitHub Actions.

The Challenge: Migrate a Set of Workflows from GitHub- to Self-Hosted Runners in GCP for Both Linux and Windows Environments

GitHub caps the number of minutes that its hosted runners can execute each month, and it also limits how many jobs can run concurrently. Because of these limits, the project’s CI workflows ran into a quota deficit, and some jobs were queued for up to an hour.

The stakeholders wanted to solve these problems, gain more control, and customize the testing environments. The new infrastructure also needed to be backward compatible with the previous environment and ready for an upcoming migration of Jenkins jobs, while keeping operational overhead low and remaining cost-effective.

Our Solution: Containerized Linux Self-Hosted Runners

Given the challenges involved and the large number of jobs that would run on Ubuntu, we chose a containerized solution, using Kubernetes (K8s) as the orchestrator and the Docker in Docker (DinD) approach to run Docker inside the containers.

First, we created a Docker image that included the following:

- Ubuntu 20.04 operating system

- GitHub Actions runner application: actions-runner-linux-x64

- Docker: For implementing DinD

- docker-compose-plugin: For workflows that use Docker Compose

- google-cloud-cli: For activating the mounted service account

- entrypoint.sh, a startup script that:

  - Activates a service account

  - Configures Docker

  - Gets the runner token from a Google Cloud Function

  - Configures the runner properties

  - Runs the runner

  - Defines a function that removes the runner

  - Traps exit signals so the runner is removed when the container stops
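
The entrypoint steps above can be sketched as follows. The original entrypoint.sh is a shell script that the case study does not reproduce, so this is a Python rendering of the same lifecycle under loudly stated assumptions: the token function URL, its `kind` query parameter, the environment variable names, the key file path, and the certificate path are all hypothetical. The `config.sh`, `config.sh remove`, and `run.sh` scripts are the ones shipped with the GitHub Actions runner application, and `gcloud auth activate-service-account --key-file` is the standard gcloud command for activating a key file.

```python
import os
import signal
import subprocess
import sys
import urllib.parse
import urllib.request

# Hypothetical settings: none of these names come from the original entrypoint.sh.
TOKEN_FUNCTION_URL = os.environ.get("TOKEN_FUNCTION_URL", "https://example.invalid/runner-token")
ORG_URL = os.environ.get("GITHUB_ORG_URL", "https://github.com/YOUR_ORG")
KEY_FILE = os.environ.get("SA_KEY_FILE", "/var/secrets/google/key.json")
RUNNER_DIR = os.environ.get("RUNNER_DIR", "/home/runner")


def config_command(org_url, token, name, labels):
    """Registration command for the runner (config.sh ships with actions-runner)."""
    return ["./config.sh", "--url", org_url, "--token", token,
            "--name", name, "--labels", ",".join(labels), "--unattended"]


def remove_command(token):
    """Command that unregisters this runner from GitHub."""
    return ["./config.sh", "remove", "--token", token]


def fetch_token(kind):
    """Ask the token function for a 'registration' or 'remove' token (assumed API)."""
    url = TOKEN_FUNCTION_URL + "?" + urllib.parse.urlencode({"kind": kind})
    with urllib.request.urlopen(url, timeout=30) as resp:
        return resp.read().decode().strip()


def main():
    # Activate the service account key mounted from a K8s Secret.
    subprocess.run(["gcloud", "auth", "activate-service-account",
                    f"--key-file={KEY_FILE}"], check=True)
    # Configure Docker: point the CLI at the DinD sidecar over TLS (assumed cert path).
    os.environ.update({"DOCKER_HOST": "tcp://localhost:2376",
                       "DOCKER_TLS_VERIFY": "1",
                       "DOCKER_CERT_PATH": "/certs/client"})
    # Get a registration token and configure the runner properties.
    name = os.environ.get("HOSTNAME", "gha-runner")
    subprocess.run(config_command(ORG_URL, fetch_token("registration"), name,
                                  ["self-hosted", "linux"]),
                   cwd=RUNNER_DIR, check=True)

    def remove_runner(signum, frame):
        # Unregister the runner so GitHub does not keep a stale entry for this pod.
        subprocess.run(remove_command(fetch_token("remove")), cwd=RUNNER_DIR, check=False)
        sys.exit(0)

    # Trap termination signals (Kubernetes sends SIGTERM when it stops the pod).
    signal.signal(signal.SIGTERM, remove_runner)
    signal.signal(signal.SIGINT, remove_runner)
    # Run the runner; this blocks until run.sh exits.
    subprocess.run(["./run.sh"], cwd=RUNNER_DIR, check=False)
```

Saved as entrypoint.py, the container command could call `main()`, for example with `python -c "import entrypoint; entrypoint.main()"`. Keeping command construction in small pure functions makes the registration arguments easy to check without a live GitHub organization.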

Second, we deployed a K8s cluster on GKE with multiple node pools, K8s Secrets, and both vertical and horizontal autoscaling.
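
For the horizontal part, the Kubernetes HorizontalPodAutoscaler (HPA) derives the desired replica count from the ratio between the observed metric and its target: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The case study does not say which metric the runner Deployment scaled on, so this minimal sketch uses a generic metric; the min/max bounds are hypothetical defaults, and the sketch ignores the HPA's special handling of missing metrics and not-yet-ready pods. It does include the default 10% tolerance, within which the HPA skips rescaling.

```python
import math


def desired_replicas(current_replicas, current_value, target_value,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Simplified HPA rule: ceil(current_replicas * current_value / target_value)."""
    ratio = current_value / target_value
    # Inside the tolerance band (default 0.1) the HPA leaves the replica count alone.
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the minReplicas/maxReplicas configured on the HPA (hypothetical values).
    return max(min_replicas, min(max_replicas, desired))
```

With a 60% CPU target and four replicas averaging 90%, the function returns 6; at 63% it stays at 4 because the ratio falls within the tolerance.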

Third, we developed a Node.js Google Cloud Function that generates GitHub tokens on demand by authenticating as a GitHub App we had created earlier. You may be wondering why we used a GitHub App instead of a personal access token. It is safer, because we can grant granular permissions, such as read access to metadata and read and write access to organization self-hosted runners.
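
The token flow behind such a function can be sketched as follows. The case study's function is written in Node.js and is not shown, so this Python sketch only illustrates the sequence documented by GitHub: sign a short-lived JWT as the App, exchange it for an installation access token, then request an organization runner registration token. The endpoints and JWT claims follow GitHub's REST API documentation. RS256 signing with the App's private key needs a third-party library in Python (for example PyJWT or cryptography), so the hypothetical `sign_rs256` callable is injected rather than implemented; the issuer, installation ID, and organization name are placeholders.

```python
import base64
import json
import time
import urllib.request

API = "https://api.github.com"


def b64url(data: bytes) -> str:
    """Base64url encoding without padding, as JWTs require (RFC 7519)."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def app_jwt(issuer, sign_rs256, now=None):
    """Build a GitHub App JWT; sign_rs256(bytes) -> signature bytes is caller-supplied."""
    now = int(time.time()) if now is None else now
    header = {"alg": "RS256", "typ": "JWT"}
    # iat is backdated 60 s for clock drift; exp stays under GitHub's 10-minute maximum.
    claims = {"iat": now - 60, "exp": now + 540, "iss": issuer}
    signing_input = (b64url(json.dumps(header).encode()) + "."
                     + b64url(json.dumps(claims).encode()))
    return signing_input + "." + b64url(sign_rs256(signing_input.encode("ascii")))


def _post(url, bearer):
    """POST with no body to a GitHub endpoint and decode the JSON response."""
    req = urllib.request.Request(url, method="POST", headers={
        "Authorization": f"Bearer {bearer}",
        "Accept": "application/vnd.github+json",
    })
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.load(resp)


def registration_token(issuer, installation_id, org, sign_rs256):
    """App JWT -> installation access token -> org runner registration token."""
    token = app_jwt(issuer, sign_rs256)
    install = _post(f"{API}/app/installations/{installation_id}/access_tokens", token)
    return _post(f"{API}/orgs/{org}/actions/runners/registration-token",
                 install["token"])["token"]
```

Because the installation token is scoped to the App's granted permissions, the function never holds a long-lived personal credential; the registration-token endpoint is what the "self-hosted runners" organization permission unlocks.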

Docker in Docker

Let’s talk a little about Docker in Docker (DinD), since this project was the first time the team had encountered the term. At first, we went with a simple solution: mounting the host’s docker.sock into the container as a volume. This worked for some of the workflows, but as the migration moved forward, workflows with more complex logic were added, and we reached a point where implementing DinD was no longer optional.

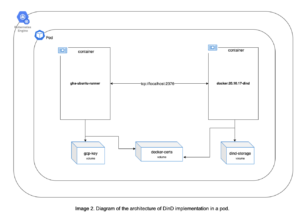

In order to implement DinD, we updated the K8s Deployment by adding another container to the pod using the docker:20.10.17-dind image. In this way, the gha-ubuntu-runner container communicates with the DinD container through tcp://localhost:2376 every time a Docker resource is needed. You can see the details in the following pod diagram:
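
A minimal sketch of such a two-container pod, written as a Python dict that renders to a JSON Deployment manifest (kubectl accepts JSON as well as YAML). Only the docker:20.10.17-dind image, the gha-ubuntu-runner container name, and port 2376 come from the case study; the runner image path, labels, replica count, volume names, and work directory are hypothetical. The TLS settings rely on the docker:dind image's documented behavior: with DOCKER_TLS_CERTDIR=/certs it generates certificates, places the client certificates in /certs/client, and serves TLS on 2376, so the runner shares that emptyDir and sets DOCKER_TLS_VERIFY and DOCKER_CERT_PATH.

```python
import json

# Hypothetical image location for the custom runner image.
RUNNER_IMAGE = "REGION-docker.pkg.dev/PROJECT/REPO/gha-ubuntu-runner:latest"


def runner_deployment(replicas=2):
    """Deployment with the runner container plus a DinD sidecar in the same pod."""
    certs = {"name": "docker-certs", "mountPath": "/certs"}
    # Same path in both containers so bind mounts from job workspaces resolve inside DinD.
    work = {"name": "runner-work", "mountPath": "/home/runner/_work"}
    runner = {
        "name": "gha-ubuntu-runner",
        "image": RUNNER_IMAGE,
        "env": [
            {"name": "DOCKER_HOST", "value": "tcp://localhost:2376"},
            {"name": "DOCKER_TLS_VERIFY", "value": "1"},
            {"name": "DOCKER_CERT_PATH", "value": "/certs/client"},
        ],
        "volumeMounts": [certs, work],
    }
    dind = {
        "name": "dind",
        "image": "docker:20.10.17-dind",
        "securityContext": {"privileged": True},  # dockerd needs a privileged container
        "env": [{"name": "DOCKER_TLS_CERTDIR", "value": "/certs"}],
        "volumeMounts": [certs, work],
    }
    labels = {"app": "gha-ubuntu-runner"}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "gha-ubuntu-runner"},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [runner, dind],
                    "volumes": [
                        {"name": "docker-certs", "emptyDir": {}},
                        {"name": "runner-work", "emptyDir": {}},
                    ],
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(runner_deployment(), indent=2))
```

The output can be applied with `python render.py > deployment.json && kubectl apply -f deployment.json`. Because both containers start together, the runner's entrypoint may need to wait until /certs/client exists before calling Docker.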

Windows

For Windows runners, we deployed a GCP managed instance group (MIG) whose instance template defines the runner registration rules. At startup, each VM runs a PowerShell script that registers it as an available self-hosted runner; the script queries the Release API for the latest runner agent version and updates the agent automatically if required.
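
The version check in the startup script can be sketched as follows. The case study's script is PowerShell and is not shown; this Python sketch assumes the "Release API" is GitHub's REST endpoint for the latest release of the actions/runner repository, whose `tag_name` has the form `v2.x.y`, and that Windows builds are published as `actions-runner-win-x64-<version>.zip` assets. How the script records the currently installed version is not described, so the `installed` argument is hypothetical.

```python
import json
import urllib.request

LATEST_RELEASE_URL = "https://api.github.com/repos/actions/runner/releases/latest"


def parse_version(tag):
    """'v2.311.0' -> (2, 311, 0) so versions compare numerically, not as strings."""
    return tuple(int(part) for part in tag.lstrip("v").split("."))


def download_url(tag):
    """Release asset URL for the Windows x64 runner package."""
    version = tag.lstrip("v")
    return (f"https://github.com/actions/runner/releases/download/"
            f"v{version}/actions-runner-win-x64-{version}.zip")


def needs_update(installed, latest):
    """True when no agent is installed yet or the installed one is older."""
    return installed is None or parse_version(installed) < parse_version(latest)


def latest_tag():
    """Query GitHub for the newest runner release tag."""
    req = urllib.request.Request(LATEST_RELEASE_URL,
                                 headers={"Accept": "application/vnd.github+json"})
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.load(resp)["tag_name"]
```

Comparing parsed tuples matters here: as plain strings, "2.9.0" would sort after "2.10.0" and block an update.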

The VMs run under a dedicated service account that has permission to call a private Cloud Function, which returns the runner registration token used by the startup script. The self-hosted runners are configured as ephemeral, so each one accepts a single job and the MIG provides a clean environment with the latest agent version for the next execution.
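
A sketch of how a VM could obtain the registration token and register an ephemeral runner. It assumes the private Cloud Function is called over HTTPS with a Google-signed ID token for the VM's service account, fetched from the Compute Engine metadata server's `identity` endpoint with the function URL as the audience, which is the usual way to invoke an authenticated HTTP function from GCE. The function URL, the organization URL, and the plain-text response format are hypothetical. `--ephemeral` is the runner configuration flag that makes a runner take one job and then unregister.

```python
import urllib.parse
import urllib.request

METADATA_IDENTITY = ("http://metadata.google.internal/computeMetadata/v1/"
                     "instance/service-accounts/default/identity")
FUNCTION_URL = "https://REGION-PROJECT.cloudfunctions.net/runner-token"  # hypothetical
ORG_URL = "https://github.com/YOUR_ORG"  # hypothetical


def id_token_request(audience):
    """Metadata-server request for an ID token whose audience is the function URL."""
    url = METADATA_IDENTITY + "?" + urllib.parse.urlencode({"audience": audience})
    return urllib.request.Request(url, headers={"Metadata-Flavor": "Google"})


def runner_token():
    """Call the private function with the VM's ID token; assumes a plain-text body."""
    with urllib.request.urlopen(id_token_request(FUNCTION_URL), timeout=10) as resp:
        id_token = resp.read().decode()
    req = urllib.request.Request(FUNCTION_URL,
                                 headers={"Authorization": f"Bearer {id_token}"})
    with urllib.request.urlopen(req, timeout=30) as resp:
        return resp.read().decode().strip()


def ephemeral_config_command(token, name):
    """Arguments for config.cmd registering a single-job (ephemeral) Windows runner."""
    return ["config.cmd", "--url", ORG_URL, "--token", token, "--name", name,
            "--ephemeral", "--unattended"]
```

Using the metadata server means no key file has to be stored on the VM: the ID token is minted for the attached service account, and the function's IAM policy decides whether the call is allowed.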

To overcome technical and stability problems with the publicly available Windows Docker image and with some distribution libraries required to run certain critical tests, the team decided to deploy a group of virtual machines for Windows rather than a second K8s cluster.

In short, a set of workflows required some subcomponents of the Visual Studio (VS) Build Tools. The official images with the .NET Framework installed did not include those components by default, and adding them in the Dockerfile proved unstable and pushed the image far beyond the recommended Docker image size. To keep the testing environment as reliable as possible, we decided to build the Windows environment on managed instance groups.

Why Self-Hosted Instead of GitHub-Hosted Runners?

Self-hosted runners offer several advantages that any project already working with a cloud provider should consider. First, cost management improves: GitHub Actions usage on self-hosted runners is free, so the only cost is the one generated by the cloud infrastructure.

Workflow performance can also improve, because autoscaling policies attached to the runners reduce the time workflows spend waiting in the queue.

Another big advantage is that security can be greatly improved, because the runner infrastructure can be hosted in private subnets.

Note, however, that while self-hosted runners can be customized to your needs, you are responsible for updating and maintaining the infrastructure, operating systems, packages, and tools.

In summary, we would suggest going with self-hosted runners when:

- The project has complex workflow logic

- GitHub Actions usage exceeds the minutes included in the free plan

- The workflows handle sensitive information, or you want to reinforce the project’s security

- Control over the infrastructure is important

- Reducing or controlling costs is key, since the runners can run on the same cloud provider as the rest of your project

To learn more about Wizeline’s Google Cloud capabilities, visit our page on the Google Partner Directory or contact us at consulting@wizeline.com.